What Are the Principal Design Issues That Have to Be Considered in Distributed Systems Engineering?

Introduction

Today's applications are marvels of distributed systems development. Each function or service that makes up an application may be executing on a different system, based upon a different system architecture, housed in a different geographical location, and written in a different computer language. Components of today's applications might be hosted on a powerful system carried in the owner's pocket and communicating with application components or services that are replicated in data centers all over the world.

What's amazing about this is that individuals using these applications typically are not aware of the complex environment that responds to their request for the local time, local weather, or for directions to their hotel.

Let's pull back the curtain and look at the industrial sorcery that makes this all possible and contemplate the thoughts and guidelines developers should keep in mind when working with this complexity.

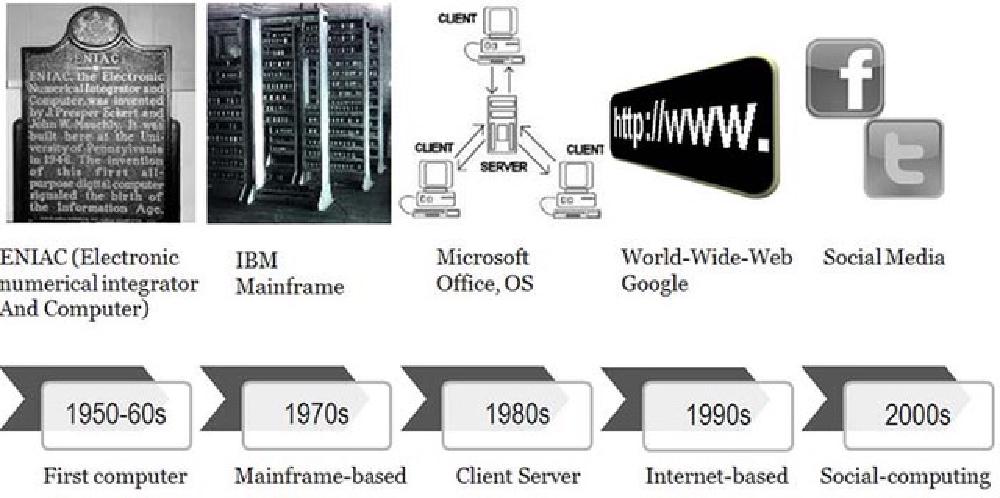

The Evolution of System Design

Figure 1: Evolution of system design over time

Source: Interaction Design Foundation, The Social Design of Technical Systems: Building technologies for communities

Application development has come a long way from the time that programmers wrote out applications, hand compiled them into the language of the machine they were using, and then entered individual machine instructions and data directly into the computer's memory using toggle switches.

As processors became more and more powerful, system memory and online storage capacity increased, and computer networking capability dramatically increased, approaches to development also changed. Data can now be transmitted from one side of the planet to the other faster than it used to be possible for early machines to move data from system memory into the processor itself!

Let's look at a few highlights of this amazing transformation.

Monolithic Design

Early computer programs were based upon a monolithic design, with all of the application components architected to execute on a single machine. This meant that functions such as the user interface (if users were actually able to interact with the program), application rules processing, data management, storage management, and network management (if the computer was connected to a computer network) were all contained within the program.

While simpler to write, these programs became increasingly complex, difficult to document, and hard to update or change. At this time, the machines themselves represented the biggest cost to the enterprise, so applications were designed to make the best possible use of the machines.

Client/Server Architecture

As processors became more powerful, system and online storage capacity increased, and data communications became faster and more cost-efficient, application design evolved to match pace. Application logic was refactored or decomposed, allowing each component to execute on a different machine, and the ever-improving networking was inserted between the components. This allowed some functions to migrate to the lowest cost computing environment available at the time. The evolution flowed through the following stages:

Terminals and Terminal Emulation

Early distributed computing relied on special-purpose user access devices called terminals. Applications had to understand the communications protocols they used and issue commands directly to the devices. When inexpensive personal computing (PC) devices emerged, the terminals were replaced by PCs running a terminal emulation program.

At this point, all of the components of the application were still hosted on a single mainframe or minicomputer.

Light Client

As PCs became more powerful, supported larger internal and online storage, and network performance increased, enterprises segmented or factored their applications so that the user interface was extracted and executed on a local PC. The rest of the application continued to execute on a system in the data center.

Often these PCs were less costly than the terminals that they replaced. They also offered additional benefits. These PCs were multi-functional devices. They could run office productivity applications that weren't available on the terminals they replaced. This combination drove enterprises to move to client/server application architectures when they updated or refreshed their applications.

Midrange Client

PC evolution continued at a rapid pace. Once more powerful systems with larger storage capacities were available, enterprises took advantage of them by moving even more processing away from the expensive systems in the data center out to the inexpensive systems on users' desks. At this point, the user interface and some of the computing tasks were migrated to the local PC.

This allowed the mainframes and minicomputers (now called servers) to have a longer useful life, thus lowering the overall cost of computing for the enterprise.

Heavy Client

As PCs became more and more powerful, more application functions were migrated from the backend servers. At this point, everything but data and storage management functions had been migrated.

Enter the Internet and the World Wide Web

The public internet and the World Wide Web emerged at this time. Client/server computing continued to be used. In an attempt to lower overall costs, some enterprises began to re-architect their distributed applications so they could use standard internet protocols to communicate, and substituted a web browser for the custom user interface function. Later, some of the application functions were rewritten in JavaScript so that they could execute locally on the client's computer.

Server Improvements

Industry innovation wasn't focused solely on the user side of the communications link. A great deal of improvement was made to the servers as well. Enterprises began to harness together the power of many smaller, less expensive industry standard servers to support some or all of their mainframe-based functions. This allowed them to reduce the number of expensive mainframe systems they deployed.

Soon, remote PCs were communicating with a number of servers, each supporting its own component of the application. Special-purpose database and file servers were adopted into the environment. Later, other application functions were migrated into application servers.

Networking was another area of intense industry focus. Enterprises began using special-purpose networking servers that provided firewalls and other security functions, file caching functions to accelerate data access for their applications, email servers, web servers, web application servers, and distributed name servers that kept track of and controlled user credentials for data and application access. The list of networking services that have been encapsulated in an appliance server grows all the time.

Object-Oriented Development

The rapid change in PC and server capabilities, combined with the dramatic price reduction for processing power, memory and networking, had a significant impact on application development. No longer were hardware and software the biggest IT costs. The largest costs were communications, IT services (the staff), power, and cooling.

Software development, maintenance, and IT operations took on a new importance, and the development process was changed to reflect the new reality that systems were cheap and people, communications, and power were increasingly expensive.

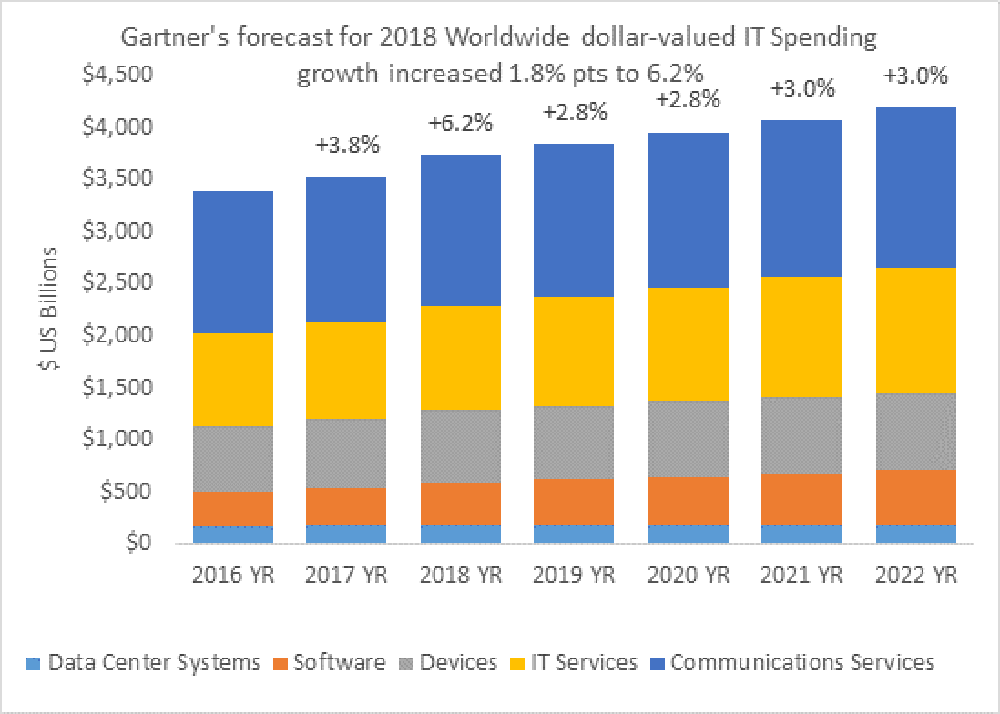

Figure 2: Worldwide IT spending forecast

Source: Gartner Worldwide IT Spending Forecast, Q1 2018

Enterprises looked to improved data and application architectures as a way to make the best use of their staff. Object-oriented applications and development approaches were the result. Many programming languages such as the following supported this approach:

- C++

- C#

- COBOL

- Java

- PHP

- Python

- Ruby

Application developers were forced to adapt by becoming more systematic when defining and documenting data structures. This approach also made maintaining and enhancing applications easier.

Open-Source Software

Opensource.com offers the following definition for open-source software: "Open source software is software with source code that anyone can inspect, modify, and enhance." It goes on to say that, "some software has source code that only the person, team, or organization who created it — and maintains exclusive control over it — can modify. People call this kind of software 'proprietary' or 'closed source' software."

Only the original authors of proprietary software can legally copy, inspect, and alter that software. And in order to use proprietary software, computer users must agree (often by accepting a license displayed the first time they run this software) that they will not do anything with the software that the software's authors have not expressly permitted. Microsoft Office and Adobe Photoshop are examples of proprietary software.

Although open-source software has been around since the very early days of computing, it came to the forefront in the 1990s when complete open-source operating systems, virtualization technology, development tools, database engines, and other important functions became available. Open-source technology is often a critical component of web-based and distributed computing. Among others, the open-source offerings in the following categories are popular today:

- Development tools

- Application support

- Databases (flat file, SQL, NoSQL, and in-memory)

- Distributed file systems

- Message passing/queueing

- Operating systems

- Clustering

Distributed Computing

The combination of powerful systems, fast networks, and the availability of sophisticated software has driven major application development away from monolithic towards more highly distributed approaches. Enterprises have learned, however, that sometimes it is better to start over than to try to refactor or decompose an older application.

When enterprises undertake the effort to create distributed applications, they often discover a few pleasant side effects. A properly designed application that has been decomposed into separate functions or services can be developed by separate teams in parallel.

Rapid application development and deployment, also known as DevOps, emerged as a way to take advantage of the new environment.

Service-Oriented Architectures

As the industry evolved beyond client/server computing models to an even more distributed approach, the phrase "service-oriented architecture" emerged. This approach was built on distributed systems concepts, standards in message queuing and delivery, and XML messaging as a standard approach to sharing data and data definitions.

Individual application functions are repackaged as network-oriented services. Each service receives a message requesting that it perform a specific service, performs that service, and then sends the response back to the function that requested it.

This approach offers another benefit: the ability for a given service to be hosted in multiple places around the network. This offers both improved overall performance and improved reliability.

Workload management tools were developed that receive requests for a service, review the available capacity, forward the request to the service with the most available capacity, and then send the response back to the requester. If a specific service doesn't respond in a timely fashion, the workload manager simply forwards the request to another instance of the service. It also marks the service that didn't respond as failed and doesn't send additional requests to it until it receives a message indicating that the service is still alive and healthy.
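The behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular workload manager is built: the `ServiceInstance` and `WorkloadManager` classes, the `capacity` and `responsive` fields, and the use of `TimeoutError` to stand in for a slow network call are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ServiceInstance:
    """One copy of a service (hypothetical model for illustration)."""
    name: str
    capacity: int            # free capacity units reported by the instance
    responsive: bool = True  # simulates whether the instance answers in time
    healthy: bool = True     # the workload manager's view of the instance

    def handle(self, request: str) -> str:
        # Stand-in for a network call that times out when unresponsive.
        if not self.responsive:
            raise TimeoutError(self.name)
        return f"{self.name} handled {request}"


class WorkloadManager:
    def __init__(self, instances):
        self.instances = list(instances)

    def dispatch(self, request: str) -> str:
        # Try healthy instances, most available capacity first.
        candidates = sorted(
            (i for i in self.instances if i.healthy),
            key=lambda i: i.capacity,
            reverse=True,
        )
        for instance in candidates:
            try:
                return instance.handle(request)
            except TimeoutError:
                # Mark as failed; skip it until a heartbeat arrives.
                instance.healthy = False
        raise RuntimeError("no healthy instance available")

    def heartbeat(self, name: str) -> None:
        # An "alive and healthy" message puts the instance back in rotation.
        for instance in self.instances:
            if instance.name == name:
                instance.healthy = True
```

A caller would build a `WorkloadManager` from a list of instances and call `dispatch()` for each request. A production tool would also need real timeouts, concurrency control, and persistent health state, none of which this sketch attempts.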

What Are the Considerations for Distributed Systems

Now that we've walked through over 50 years of computing history, let's consider some rules of thumb for developers of distributed systems. There's a lot to think about because a distributed solution is likely to have components or services executing in many places, on different types of systems, and messages must be passed back and forth to perform work. Care and consideration are absolute requirements to be successful creating these solutions. Expertise must also be available for each type of host system, development tool, and messaging system in use.

Nailing Down What Needs to Be Done

One of the first things to consider is what needs to be accomplished! While this sounds simple, it's incredibly important.

It's amazing how many developers start building things before they know, in detail, what is needed. Often, this means that they build unnecessary functions and waste their time. To quote Yogi Berra, "if you don't know where you are going, you'll end up someplace else."

A good place to start is knowing what needs to be done, what tools and services are already available, and what people using the final solution should see.

Interactive Versus Batch

Since fast responses and low latency are often requirements, it would be wise to consider what should be done while the user is waiting and what can be put into a batch process that executes on an event-driven or time-driven schedule.

After the initial segmentation of functions has been considered, it is wise to plan when background batch processes need to execute, what data these functions manipulate, and how to make sure these functions are reliable, are available when needed, and do not lose data.
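As a rough sketch of this split, the Python below does only the work the user must wait for in the interactive path and defers the rest to a batch step. The order-handling scenario, the function names, and the in-memory queue are all assumptions chosen for illustration.

```python
from collections import deque

# Hypothetical in-memory queue. A real system would use durable storage
# so that deferred work is not lost if the process stops.
deferred_jobs = deque()


def place_order(order_id: str) -> str:
    """Interactive path: do only what the user must wait for."""
    deferred_jobs.append(("send_receipt", order_id))
    deferred_jobs.append(("update_report", order_id))
    return f"order {order_id} accepted"


def run_batch() -> list:
    """Batch path: triggered by a schedule or an event, not by the user."""
    completed = []
    while deferred_jobs:
        job, order_id = deferred_jobs.popleft()
        # Stand-in for the real work each deferred job would perform.
        completed.append(f"{job}:{order_id}")
    return completed
```

The user sees the reply from `place_order()` immediately, while `run_batch()` can run later on whatever trigger the design calls for. The reliability and data-loss questions raised above are exactly the ones the in-memory queue leaves unanswered.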

Where Should Functions Be Hosted?

Only after the "what" has been planned in fine detail should the "where" and "how" be considered. Developers have their favorite tools and approaches and often will invoke them even if they might not be the best choice. As Bernard Baruch was reported to say, "if all you have is a hammer, everything looks like a nail."

It is also important to be aware of corporate standards for enterprise development. It isn't wise to select a tool simply because it is popular at the moment. That tool just might do the job, but remember that everything that is built must be maintained. If you build something that only you can understand or maintain, you may just have tied yourself to that function for the rest of your career. I have personally created functions that worked properly and were small and reliable. I received phone calls regarding these for ten years after I left that company because later developers could not understand how the functions were implemented. The documentation I wrote had been lost long before.

Each function or service should be considered separately in a distributed solution. Should the function be executed in an enterprise data center, in the data center of a cloud services provider or, perhaps, in both? Consider that there are regulatory requirements in some industries that direct the selection of where and how data must be maintained and stored.

Other considerations include:

- What type of system should be the host of that function? Is one system architecture better for that function? Should the system be based upon ARM, x86, SPARC, Precision, Power, or even be a mainframe?

- Does a specific operating system provide a better computing environment for this function? Would Linux, Windows, UNIX, System I, or even System Z be a better platform?

- Is a specific development language better for that function? Is a specific type of data management tool? Is a flat file, SQL database, NoSQL database, or a non-structured storage mechanism better?

- Should the function be hosted in a virtual machine or a container to facilitate function mobility, automation and orchestration?

Virtual machines executing Windows or Linux were frequently the choice in the early 2000s. While they offered significant isolation for functions and made it easy to restart or move them when necessary, their processing, memory and storage requirements were rather high. Containers, another approach to processing virtualization, are the emerging choice today because they offer similar levels of isolation and the ability to restart and migrate functions, while consuming far less processing power, memory or storage.

Performance

Performance is another critical consideration. While defining the functions or services that make up a solution, the developers should be aware of whether they have significant processing, memory or storage requirements. It might be wise to look at these functions closely to learn if they can be further subdivided or decomposed.

Further segmentation would allow an increase in parallelization, which would potentially offer performance improvements. The trade-off, of course, is that this approach also increases complexity and, potentially, makes the functions harder to manage and to make secure.
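To make the idea concrete, here is a small Python sketch in which one large job is subdivided into independent chunks that are processed in parallel. The `process_chunk` workload, the chunk size, and the worker count are placeholders, not recommendations.

```python
from concurrent.futures import ThreadPoolExecutor


def process_chunk(chunk):
    # Stand-in for a piece of work that does not depend on other chunks.
    return sum(x * x for x in chunk)


def process_all(data, chunk_size=3, workers=4):
    # Subdivide the job into independent pieces.
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, one per chunk.
        partials = list(pool.map(process_chunk, chunks))
    # Combine the partial results into the final answer.
    return sum(partials)
```

Threads are used here only to show the structure. In CPython, CPU-bound work like this would usually need separate processes or separate services to run truly in parallel, which is where the added complexity mentioned above starts to appear.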

Reliability

In high-stakes enterprise environments, solution reliability is essential. The developer must consider when it is acceptable to force people to re-enter data or re-run a function, and when a function can be unavailable.

Database developers ran into this issue in the 1960s and developed the concept of an atomic function. That is, the function must complete or the partial updates must be rolled back, leaving the data in the state it was in before the function began. This same mindset must be applied to distributed systems to ensure that data integrity is maintained even in the event of service failures and transaction disruptions.
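A minimal Python sketch of the all-or-nothing idea follows. It keeps a snapshot of the data and restores it if any step fails. The `atomic_update`, `debit`, and `credit` helpers and the account-balance data are hypothetical, and a real distributed system would need a coordination protocol rather than an in-memory copy.

```python
import copy


def atomic_update(state: dict, steps) -> dict:
    """Apply every step or none of them (illustrative helper)."""
    snapshot = copy.deepcopy(state)   # remember the state before we began
    try:
        for step in steps:
            step(state)
    except Exception:
        state.clear()
        state.update(snapshot)        # roll back partial updates
        raise
    return state


def debit(account, amount):
    def step(state):
        if state[account] < amount:
            raise ValueError("insufficient funds")
        state[account] -= amount
    return step


def credit(account, amount):
    def step(state):
        state[account] += amount
    return step
```

If a transfer is expressed as `[credit("b", 50), debit("a", 500)]` and the debit fails, the earlier credit is undone and the caller sees the original balances along with the error.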

Functions must be designed to totally complete or roll back intermediate updates. In critical message passing systems, messages must be stored until an acknowledgement that a message has been received comes in. If such an acknowledgement isn't received, the original message must be resent and a failure must be reported to the management system.
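The store, resend, and report rule can be sketched as follows. The `ReliableSender` class, its `transport` callable (assumed to return `True` when an acknowledgement arrives), and the `report_failure` callback are invented for this example and do not correspond to any specific messaging product.

```python
class ReliableSender:
    """Keeps each message until it is acknowledged (hypothetical sketch)."""

    def __init__(self, transport, report_failure, max_attempts=3):
        self.transport = transport            # callable: True means acked
        self.report_failure = report_failure  # callable: notifies management
        self.max_attempts = max_attempts
        self.pending = {}                     # message id -> stored message

    def send(self, msg_id, message) -> bool:
        self.pending[msg_id] = message        # store before the first attempt
        for attempt in range(1, self.max_attempts + 1):
            if self.transport(message):
                del self.pending[msg_id]      # ack received; safe to discard
                return True
            # No ack: report it, then loop to resend the stored message.
            self.report_failure(msg_id, attempt)
        return False                          # still stored for a later retry
```

Because the message stays in `pending` until an acknowledgement arrives, nothing is lost when every attempt fails. A real implementation would keep that store on durable media and would have to handle duplicate deliveries on the receiving side.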

Manageability

Although not as much fun to consider as the core application functionality, manageability is a key factor in the ongoing success of the application. All distributed functions must be fully instrumented to allow administrators to both understand the current state of each function and to change function parameters if needed. Distributed systems, after all, are constructed of many more moving parts than the monolithic systems they replace. Developers must be constantly aware of making this distributed computing environment easy to use and maintain.

This brings us to the absolute requirement that all distributed functions must be fully instrumented to allow administrators to understand their current state. After all, distributed systems are inherently more complex and have more moving parts than the monolithic systems they replace.
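One possible shape for such instrumentation is a thin wrapper that counts calls and errors, records timing, and exposes adjustable parameters. The Python sketch below is only an illustration: the `InstrumentedFunction` class, its metric names, and the `timeout_s` parameter are assumptions, and the wrapper merely stores the parameter rather than enforcing it.

```python
import time


class InstrumentedFunction:
    """Wraps a function so administrators can inspect and tune it.

    The metric names and the timeout_s parameter are illustrative only.
    """

    def __init__(self, func, timeout_s=5.0):
        self.func = func
        self.parameters = {"timeout_s": timeout_s}
        self.calls = 0
        self.errors = 0
        self.last_duration_s = None

    def __call__(self, *args, **kwargs):
        self.calls += 1
        start = time.perf_counter()
        try:
            return self.func(*args, **kwargs)
        except Exception:
            self.errors += 1
            raise
        finally:
            self.last_duration_s = time.perf_counter() - start

    def status(self) -> dict:
        # Snapshot of current state for a management system to poll.
        return {
            "calls": self.calls,
            "errors": self.errors,
            "last_duration_s": self.last_duration_s,
            "parameters": dict(self.parameters),
        }

    def set_parameter(self, name, value) -> None:
        # Only parameters that already exist may be changed at run time.
        if name not in self.parameters:
            raise KeyError(name)
        self.parameters[name] = value
```

A management system could poll `status()` to understand the current state and call `set_parameter()` to adjust behavior without redeploying the function.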

Security

Distributed system security is an order of magnitude more difficult than security in a monolithic environment. Each function must be made secure separately, and the communication links between and among the functions must also be made secure. As the network grows in size and complexity, developers must consider how to control access to functions, how to make sure that only authorized users can access these functions, and how to isolate services from one another.

Security is a critical element that must be built into every function, not added on later. Unauthorized access to functions and data must be prevented and reported.

Privacy

Privacy is the subject of an increasing number of regulations around the world. Examples like the European Union's GDPR and the U.S. HIPAA regulations are important considerations for any developer of customer-facing systems.

Mastering Complexity

Developers must take the time to consider how all of the pieces of a complex computing environment fit together. It is hard to maintain the discipline that a service should encapsulate a single function or, perhaps, a small number of tightly interrelated functions. If a given function is implemented in multiple places, maintaining and updating that function can be hard. What would happen when one instance of a function doesn't get updated? Finding that error can be very challenging.

This means it is wise for developers of complex applications to maintain a visual model that shows where each function lives so it can be updated if regulations or business requirements change.

Often this means that developers must take the time to document what they did, when changes were made, and what the changes were meant to accomplish, so that other developers aren't forced to decipher mounds of text to learn where a function is or how it works.

To be successful as an architect of distributed systems, a developer must be able to master complexity.

Approaches Developers Must Master

Developers must master decomposing and refactoring application architectures, thinking in terms of teams, and growing their skill in approaches to rapid application development and deployment (DevOps). After all, they must be able to think systematically about what functions are independent of one another and what functions rely on the output of other functions to work. Functions that rely upon one another may be best implemented as a single service. Implementing them as independent functions might create unnecessary complexity, result in poor application performance, and impose an unnecessary burden on the network.

Virtualization Technology Covers Many Bases

Virtualization is a far bigger category than just virtual machine software or containers. Both of these are considered processing virtualization technology. There are at least seven different types of virtualization technology in use in modern applications today. Virtualization technology is available to enhance how users access applications, where and how applications execute, where and how processing happens, how networking functions, where and how data is stored, how security is implemented, and how management functions are accomplished. The following model of virtualization technology might be helpful to developers when they are trying to get their arms around the concept of virtualization:

Figure 3: Architecture of virtualized systems

Source: 7 Layer Virtualization Model, VirtualizationReview.com

Think of Software-Defined Solutions

It is also important for developers to think in terms of "software defined" solutions. That is, to segment the control from the actual processing so that functions can be automated and orchestrated.

Developers shouldn't feel like they are on their own when wading into this complex world. Suppliers and open-source communities offer a number of powerful tools. Various forms of virtualization technology can be a developer's best friend.

Virtualization Technology Can Be Your Best Friend

- Containers make it possible to easily develop functions that can execute without interfering with one another and can be migrated from system to system based upon workload demands.

- Orchestration technology makes it possible to control many functions to ensure they are performing well and are reliable. It can also restart or move them in a failure scenario.

- Supports incremental development: functions can be developed in parallel and deployed as they are ready. They also can be updated with new features without requiring changes elsewhere.

- Supports highly distributed systems: functions can be deployed locally in the enterprise data center or remotely in the data center of a cloud services provider.

Think In Terms of Services

This means that developers must think in terms of services and how services can communicate with one another.

Well-Defined APIs

Well-defined APIs mean that multiple teams can work simultaneously and still know that everything will fit together as planned. This typically means a bit more work up front, but it is well worth it in the end. Why? Because overall development can be faster. It also makes documentation easier.
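As an illustration of how an agreed contract lets teams work in parallel, the Python sketch below defines an interface with `typing.Protocol`. The `WeatherService` contract, the stub implementation, and the `packing_advice` consumer are all hypothetical names invented for this example.

```python
from typing import Protocol


class WeatherService(Protocol):
    """The agreed contract (hypothetical name and method)."""

    def current_temperature(self, city: str) -> float:
        """Return the temperature in degrees Celsius for the given city."""
        ...


class StubWeatherService:
    # One team can ship a stub early so the other team is never blocked.
    def current_temperature(self, city: str) -> float:
        return 20.0


def packing_advice(service: WeatherService, city: str) -> str:
    # The consuming team codes against the contract, not an implementation.
    return "coat" if service.current_temperature(city) < 10.0 else "t-shirt"
```

The consuming code depends only on the method signature, so the stub can later be swapped for a real networked implementation without changes on the consumer side. The contract's docstrings also double as a starting point for documentation.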

Support Rapid Application Development

This approach is also perfect for rapid application development and rapid prototyping, also known as DevOps. Properly executed, DevOps also produces rapid time to deployment.

Think In Terms of Standards

Rather than relying on a single vendor, the developer of distributed systems would be wise to think in terms of multi-vendor, international standards. This approach avoids vendor lock-in and makes finding expertise much easier.

Summary

It's interesting to note how guidelines for rapid application development and deployment of distributed systems start with "take your time." It is wise to plan out where you are going and what you are going to do; otherwise you are likely to end up somewhere else, having burned through your development budget, with little to show for it.


Source: https://www.suse.com/c/rancher_blog/considerations-when-designing-distributed-systems/
